DeepFakes are a fascinating and relatively recent development in deep learning. This technology synthesizes a completely new face after being trained on a dataset of “real humans”.

Deepfakes have been used for less-than-savoury purposes. Now, they are being used for a range of positive and beneficial purposes. “Safe-fakes” anonymize image data prior to training deep learning models. Examples include autonomous vehicles, video anonymization, anonymization of patient health training data and even the creation of artwork.

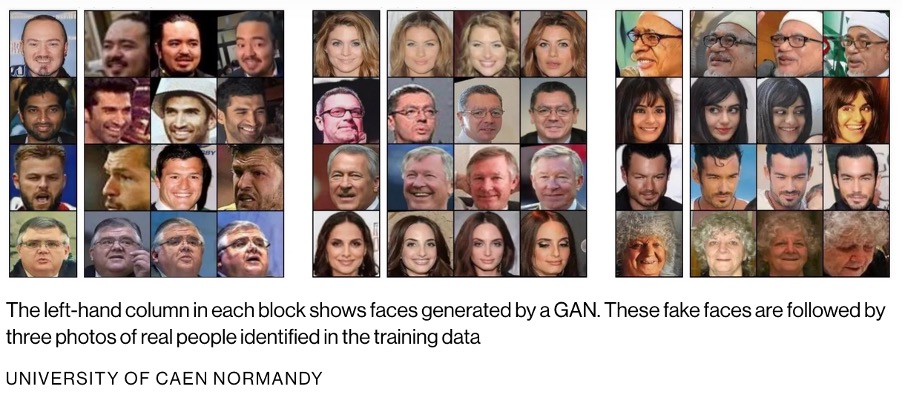

Anonymization often uses Generative Adversarial Networks (GANs) which, to date, have been thought to be effective in concealing the “real human” training images.

This raises a critical question: “How private is the data used to train deepfakes?”

A recent study by the University of Caen in France and work by Nvidia found that AI fake-face generators can be rewound to reveal the real faces on which they were trained. The University of Caen team detected private training data in the output of deep-learning models. The Nvidia team, taking a different approach, uncovered private data by recreating the input by working backwards through the neural net.

While the effectiveness of these “de-faking” approaches may be limited for deep models with multiple layers of neural networks, they nevertheless have uncovered a serious data privacy issue which may expose businesses, that rely on these anonymization technologies, to privacy challenges. Boards need to be aware of these risks.

It would be good to hear your views on this topic. Have you come across other de-anonymization techniques or solutions for overcoming this issue?